
April 13, 2024

The Race to Generative AI and the Need for Responsibility

Generative AI is a revolution not only because of its mind-blowing generative capabilities but, even more, because it has made AI accessible to the masses. As the race to adopt it picks up pace, companies are scrambling to be the first to harness its power. It’s a classic “prisoner’s dilemma”: everyone fears falling behind if they slow down, but breakneck speed can lead to cutting corners on ethics and safety.

The race for AI dominance isn’t just between big names like OpenAI and Google. Many companies are building custom applications on top of large language models (LLMs) to transform their industries. Take, for example, the pharmaceutical giant Merck, which is using AI to analyze vast datasets of patient information and medical research. This allows it to identify patterns and trends that could lead to breakthroughs in drug development and personalized medicine. Imagine AI sifting through mountains of data to find the next miracle cure for cancer! This billion-dollar investment in generative AI shows just how seriously companies are taking the AI race, transforming how they operate with the potential for massive performance gains.

The impact spans multiple sectors; take financial services, for example. Generative AI is sweeping across the financial services market like a tidal wave, with analysts at MarketResearch.biz predicting a meteoric rise from a mere $847.2 million in 2022 to a colossal $9.47 billion by 2032, a staggering compound annual growth rate (CAGR) of 28.1%.
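The growth figure above is a compound annual growth rate. As a quick sanity check, the sketch below computes the rate implied by the two quoted endpoints, assuming growth compounds evenly over the ten years from 2022 to 2032. It comes out at roughly 27.3% rather than 28.1%; the gap presumably reflects the base year or methodology used in the analysts’ report, which the quoted figures do not specify.

```python
def cagr(start: float, end: float, periods: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / periods) - 1."""
    return (end / start) ** (1 / periods) - 1


# Market size as quoted in the article, in USD millions.
# Assumption: growth compounds annually from 2022 to 2032 (10 periods).
start_value = 847.2   # 2022
end_value = 9_470.0   # 2032
years = 2032 - 2022

rate = cagr(start_value, end_value, years)
print(f"Implied CAGR over {years} years: {rate:.1%}")
# prints: Implied CAGR over 10 years: 27.3%
```

Small differences in the assumed start year or number of compounding periods shift the result noticeably, which is why published CAGR figures should be read alongside the period they cover.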

While these staggering figures and advances are transforming the way business is done, the ethical challenges become even more pronounced. Generative AI models are inherently data-hungry: they require trillions of tokens (words and word fragments) of text and/or vast amounts of visual data for training. How this huge dataset is pre-processed, refined and filtered largely determines whether the resulting model exhibits ethical bias or infringes intellectual property. A recent example is Google’s Gemini, whose AI-powered image generator rightly came under fire for being unable to depict historical and hypothetical events without forcing relevant characters to be nonwhite.

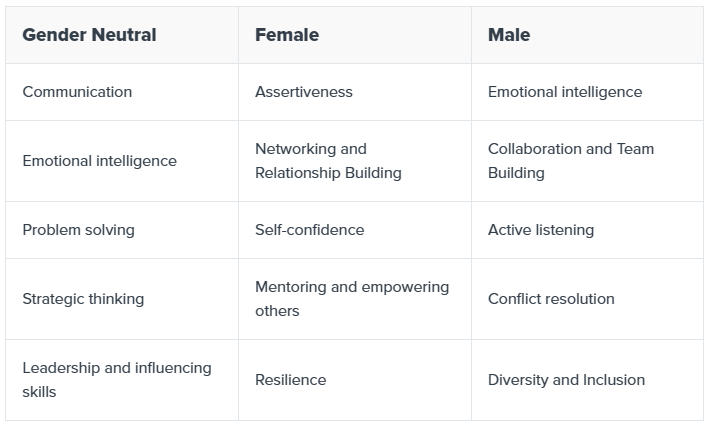

Another example comes from ChatGPT. When asked to list the traits of an HR manager at a large organization (once for a male and once for a female manager), ChatGPT responded with the following focus areas:

These outputs fall back on stereotypical expectations. Men, despite being a smaller demographic among HR managers, are nudged towards improving diversity and inclusion, while women receive suggestions that emphasize assertiveness and self-confidence. Even when gender is not specified, the underlying training data might still steer the tool towards biased suggestions.

While there’s a commendable push towards ethically robust and unbiased generative AI systems, solely relying on this future ideal might be a risky gamble. The reality is, even with extensive research, achieving complete neutrality in complex AI models remains a significant challenge. This underscores the importance of promoting Responsible Use of AI alongside ongoing research efforts.

Consider a scenario where an AI system is designed to filter content on a social media platform. Even if the model itself functions flawlessly, irresponsible users could craft malicious prompts to bypass its filters and spread harmful content. Similarly, a company analyzing customer sentiment with AI might misinterpret the results if the people interpreting the data lack proper training. Intellectual property violations, too, can stem from human actions: someone might carelessly copy and paste AI-generated content onto their website, potentially plagiarizing the source material the AI was trained on.

Take the example of Alice, a marketing manager at a tech company who is tasked with creating captivating captions for new product launches. In her haste, she pastes internal documents containing confidential details, such as product specs, marketing strategies and even competitor analysis data, into a popular online AI copywriting tool. This could lead to a data breach, exposing sensitive information to the AI provider and potentially to third parties if the provider’s security isn’t robust. Worse still, the generated captions might unintentionally echo snippets of this confidential data and, through telling phrasing or specific word choices, reveal crucial details to competitors, jeopardizing her company’s competitive edge.
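One practical safeguard against a mistake like Alice’s is to screen text before it leaves the organization. The sketch below is a minimal, hypothetical illustration of that idea, assuming the company wraps sensitive passages in `[CONFIDENTIAL]...[/CONFIDENTIAL]` markers and treats project codenames and email addresses as sensitive. The marker format, the patterns and the `redact_for_external_ai` function are assumptions made for illustration, not features of any real AI tool, and simple regular expressions catch only what they are told to look for, so a check like this complements, rather than replaces, employee awareness and training.

```python
import re

# Hypothetical patterns an organization might treat as confidential.
# Illustrative assumptions only, not a complete or recommended policy.
CONFIDENTIAL_PATTERNS = [
    # Text explicitly wrapped in (hypothetical) confidentiality markers
    re.compile(r"\[CONFIDENTIAL\].*?\[/CONFIDENTIAL\]", re.DOTALL),
    # Internal project codenames of the form "Project <Word>"
    re.compile(r"\bProject [A-Z][a-z]+\b"),
    # Email addresses
    re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
]


def redact_for_external_ai(text: str, placeholder: str = "[REDACTED]") -> tuple[str, int]:
    """Mask confidential spans before text is sent to an external AI tool.

    Returns the redacted text and the number of spans that were masked.
    """
    count = 0
    for pattern in CONFIDENTIAL_PATTERNS:
        # subn returns (new_text, number_of_substitutions)
        text, n = pattern.subn(placeholder, text)
        count += n
    return text, count


if __name__ == "__main__":
    draft = (
        "Write a launch caption for Project Falcon. "
        "[CONFIDENTIAL]Battery life is 40% above the rival flagship.[/CONFIDENTIAL] "
        "Questions go to alice@example.com."
    )
    safe_text, masked = redact_for_external_ai(draft)
    print(safe_text)
    # prints: Write a launch caption for [REDACTED]. [REDACTED] Questions go to [REDACTED].
    print(f"{masked} span(s) masked")
```

Returning the count alongside the text lets the caller decide whether to warn the user, block the request outright, or send the masked text on.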

These examples highlight the crucial role that awareness, knowledge, and an understanding of limitations play in Generative AI adoption within enterprises. Here’s how these elements come into play:

- Awareness: Enterprises need to instill in employees an awareness of the potential pitfalls of Generative AI, such as biased outputs and security vulnerabilities arising from irresponsible data use, as well as of the importance of human oversight.

- Knowledge: Users (employees) need to understand how Generative AI models work, what data they are trained on, and where their potential biases lie. This knowledge empowers them to use the technology responsibly and to critically evaluate its outputs.

- Limitations: Generative tools have limitations, and recognizing them is essential. While these tools can be powerful for creative tasks and data analysis, they are not perfect: they can be fooled by malicious prompts, misinterpret data, and hallucinate or fabricate facts.

- Explainability and Transparency: Understanding how a Generative AI model arrives at its outputs can be challenging. Nevertheless, enterprises must explore tools and techniques for explainability to build trust in the Generative AI solutions they deploy to augment their technology portfolio, especially when these tools are part of a decision-making process.

- Regulatory Landscape: The legal and regulatory landscape surrounding AI is constantly evolving. Leadership needs to stay informed about relevant regulations and ensure that the organization’s use of Generative AI complies with data privacy laws and upholds ethical standards.

- Human-AI Collaboration: Finally, Generative AI is most effective when used in collaboration with human expertise. Enterprises need to focus on fostering a culture of human-AI collaboration where AI augments human capabilities rather than replaces them.

Generative AI presents a transformative future, but navigating the ethical landscape is paramount. By fostering user awareness, knowledge, and a respect for limitations, enterprises can ensure responsible AI adoption. Prioritizing human-AI collaboration, explainability, and regulatory compliance will be crucial in building trust and unlocking the true potential of this revolutionary technology. The path forward lies in harnessing the power of AI for good, ensuring ethical considerations are not lost in the race for innovation.